See No Evil, Hear No Evil? How Deepfaked Identities Finagle Money from Banks

Customer or AI-Generated Identity? The lines are as blurry as ever.

Today’s fraudsters are truly, madly, deeply fake.

Deepfaked identities, which use AI-generated audio or visuals to pass for a legitimate customer, are multiplying at an alarming rate. Banks and other fintech companies, which collectively lost nearly $2 billion to bank transfer or payment fraud in 2022, are firmly in their crosshairs.

Sniffing out deepfaked chicanery isn’t easy. One study found that 43% of people struggle to identify a deepfaked video. It’s especially concerning that this technology is still in its infancy, yet already capable of luring consumers and businesses into fraudulent transactions.

Over time, deepfakes will seem increasingly less fake and much harder to detect. In fact, an offshoot of deepfaked synthetic identities, the SuperSynthetic™ identity, has already emerged from the pack. Banks and financial organizations have no choice but to stay on top of developments in deepfake technology and swiftly adopt a solution to combat this unprecedented threat.

Rise of the deepfakes

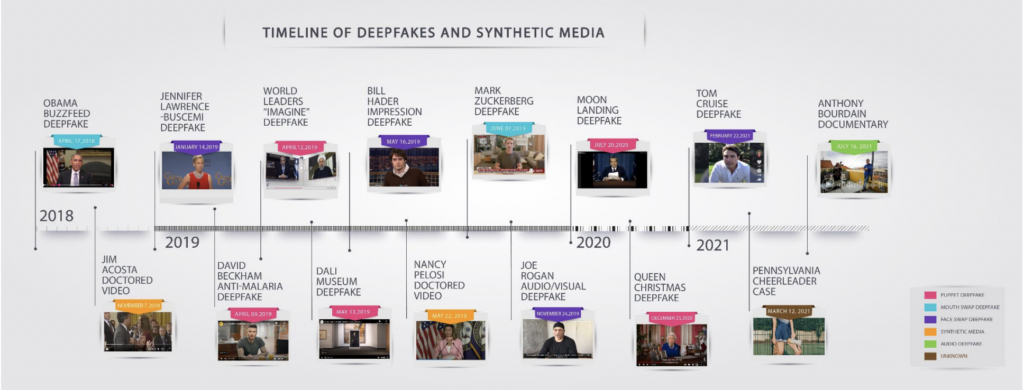

Deepfakes have come a long way since exploding onto the scene roughly five years ago. Back then, deepfaked videos aimed to entertain. Most featured harmless superimpositions of one celebrity’s face onto another, such as this viral Jennifer Lawrence-Steve Buscemi mashup.

The trouble started when users began deepfaking sexually explicit videos, opening up a massive can of privacy- and ethics-related worms. Then a 2018 video of a deepfaked Barack Obama speech showed just how dangerous the technology could be.

The proliferation and growing sophistication of deepfakes over the past five years can be attributed to the democratization of AI and deep learning tools. Today, anyone can doctor an image or video with just a few taps. FakeApp, Lyrebird, and countless other apps enable smartphone users to seamlessly integrate someone’s face into an existing video, or generate a new video that can easily pass for the real deal.

Given this degree of accessibility, the threat of deepfakes to banks and fintech companies will only intensify in the months and years ahead. The specter of new account fraud, perpetrated by way of a deepfaked synthetic identity, looms large in the era of remote customer onboarding.

This is a stickup

Synthetic identity fraud, in which bad actors invent a new identity using a combination of stolen and made-up credentials, has already cost banks upwards of $6 billion. Deepfake technology only adds fuel to the fire.

A deepfaked synthetic identity heist doesn’t require any heavy lifting. A fraudster crops someone’s face from a social media picture and is well on the way to spawning a lifelike entity that speaks, blinks, and moves its head on screen. Image- or video-based identity verification, a KYC protocol designed to deter fraud before an account is opened or credit is extended, is rendered moot. The fraudster’s uploaded selfie will be a dead ringer for the face on the ID card. Even a live video conversation with an agent is unlikely to ferret out a deepfaked identity.

Audio-based verification processes are circumvented just as easily. Exhibit A: the vulnerability of the voice ID technology used by banks across the US and Europe, ostensibly another layer of login security that prompts users to say some iteration of, “My voice is my password.” This sounds great in theory, but AI-generated audio solutions can clone anyone’s voice and create a virtually identical replica. One user, for example, tapped voice creation tool ElevenLabs to clone his own voice using an audio sample. He accessed his account in one try.

In this use case, the bad actor would also need a date of birth to access the account. But, thanks to frequent big-time data leaks—such as the recent Progress Corp breach—dates of birth and other Personally Identifiable Information (PII) are readily available on the dark web.

Here come the SuperSynthetics

In deepfaked synthetic identities, banks and financial services platforms clearly face a formidable foe. But this worthy opponent has been in the gym, protein-shaking and bodyhacking itself into something stronger and infinitely more dangerous: the SuperSynthetic identity.

SuperSynthetic identities, armed with the same deepfake capabilities as regular synthetics (and then some), bring an even greater level of Gen AI-powered smarts to the table. No need for a brute force attack. SuperSynthetics operate with a sophistication and discernment that is so lifelike it’s spooky. For proof, one need only look at the patience of these bots.

SuperSynthetics are all about the long con. Their aged and geo-located identities play nice for months, engaging with the website and making small deposits here and there, enough to appear human and innocuous. Once enough of these transactions accumulate and the bank’s trust is earned, a credit card or loan is extended. Any additional verification is bypassed via deepfake, of course. When the money is deposited into their SuperSynthetic account, the bad actor immediately withdraws it, along with their seed money, before finding another bank to swindle.

How prevalent are SuperSynthetics? Deduce estimates that between 3% and 5% of financial services accounts onboarded within the past year are in fact SuperSynthetic “sleepers” waiting to strike. That estimate certainly warrants a second look at how customers are verified before obtaining a loan or credit card, including the consideration of in-person verification to rule out any deepfake activity.

No time like the present

If deepfaked synthetic identities don’t call for a revamped cybersecurity solution, deepfaked SuperSynthetic identities will certainly do the trick. Our money is on a top-down approach that views synthetic identities collectively rather than individually. Analyzing synthetics as a group uncovers their digital footprints—signature online behaviors and patterns too consistent to suggest mere coincidence.
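
To make the "collective, not individual" idea a little more concrete, here is a minimal Python sketch. It is an illustration only, not a description of any vendor's actual method. It assumes each account can be summarized by a few behavioral measurements, reduces those to a coarse signature, and flags any group of accounts that shares one. The `AccountActivity` fields, the bucket sizes, and the `min_group_size` threshold are all hypothetical choices made for the example.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class AccountActivity:
    """Hypothetical per-account summary; field names are assumptions."""
    account_id: str
    avg_deposit: float            # average deposit amount, in dollars
    days_between_deposits: float  # average gap between deposits
    typical_login_hour: int       # 0-23, most common login hour


def behavior_signature(a, deposit_bucket=5.0, interval_bucket=1.0):
    """Coarsen an account's behavior so near-identical accounts collide."""
    return (
        round(a.avg_deposit / deposit_bucket),
        round(a.days_between_deposits / interval_bucket),
        a.typical_login_hour,
    )


def flag_lookalike_groups(accounts, min_group_size=3):
    """Return sorted account-ID lists for signatures shared by many accounts."""
    groups = defaultdict(list)
    for a in accounts:
        groups[behavior_signature(a)].append(a.account_id)
    return [sorted(ids) for ids in groups.values() if len(ids) >= min_group_size]


# Made-up sample data: three accounts behave almost identically.
accounts = [
    AccountActivity("acct-001", 20.10, 14.0, 3),
    AccountActivity("acct-002", 19.80, 14.2, 3),
    AccountActivity("acct-003", 20.40, 13.9, 3),
    AccountActivity("acct-004", 250.00, 30.0, 18),
    AccountActivity("acct-005", 42.50, 7.0, 9),
]

suspicious = flag_lookalike_groups(accounts)
print(suspicious)
```

Viewed one at a time, none of the first three accounts looks unusual. Viewed together, their matching deposit size, deposit rhythm, and login hour stand out. Fixed buckets are a deliberate simplification: accounts that fall on either side of a bucket boundary will not be grouped, so a real system would likely need many more signals and a proper clustering method.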

Whatever banks choose to do, kicking the can down the road only works in favor of the fraudsters. With every passing second, the deepfakes are looking (and sounding) more real.

Time is a-tickin’, money is a-burnin’, and customers are a-churnin’.