Identity Verification Has a Deepfake Problem

An old-school approach could be the answer for finservs

For many people, video conferencing apps like Zoom made work, school, and other everyday activities possible amid the global pandemic—and more convenient. Remote workers commuted from sleeping position to upright position. Business meetings resembled “Hollywood Squares.” Business-casual meant a collared shirt up top and pajama pants down low.

Fraudsters were also quite comfortable during this time. Unprecedented numbers of people sheltering in place naturally caused an ungodly surge in online traffic and a corresponding increase in security breaches. Users were easy prey, and so were many of the apps and companies they transacted with.

In the financial services (finserv) sector, branches closed down and ceased face-to-face customer service. Finserv companies relied on Zoom for document verification and manual reviews, and bad actors, armed with stolen credentials and improved deepfake technology, took full advantage.

Even in the face of AI-generated identity fraud, most finservs still use remote identity verification, both to comply with regulatory KYC requirements and when it comes time to offer a loan. It’s easier than meeting in person, and what customer doesn’t prefer verifying their identity from the comfort of their couch?

But AI-powered synthetic identities are getting smarter and, while deepfake deterrents are closing the gap, a return to an old-school approach remains the only foolproof option for finservs.

Deepfakes, and the SuperSynthetic™ quandary

Gen AI platforms such as ChatGPT and Bard, coupled with their nefarious brethren FraudGPT and WormGPT and the like, are so accessible it’s scary. Everyday users can create realistic, deepfaked images and videos with little effort. Voices can be cloned and manipulated to say anything and sound like anyone. The rampant spread of misinformation across social media isn’t surprising given that nearly half of people can’t identify a deepfaked video.

Finserv companies are especially susceptible to deepfaked trickery, and bypassing remote identity verification will only get easier as deepfake technology continues to rapidly improve.

For SuperSynthetics, the new generation of fraudulent deepfaked identities, fooling finservs is quite easy. SuperSynthetics (a one-two-three punch of deepfake technology, synthetic identity fraud, and legitimate credit histories) are more humanlike and individualistic than any previous iteration of bot. The bad actors who deploy these SuperSynthetic bots aren’t in a rush; they’re willing to play the long game, depositing small amounts of money over time and interacting with the website to convince finservs they’re prime candidates for a loan or credit application.

When it comes time for the identity verification phase, SuperSynthetics deepfake their documents, selfie, and/or video interview…and they’re in.

An overhaul is in order

Deepfake technology, which first entered the mainstream in 2018, is still in its infancy yet already pokes plenty of holes in remote identity verification.

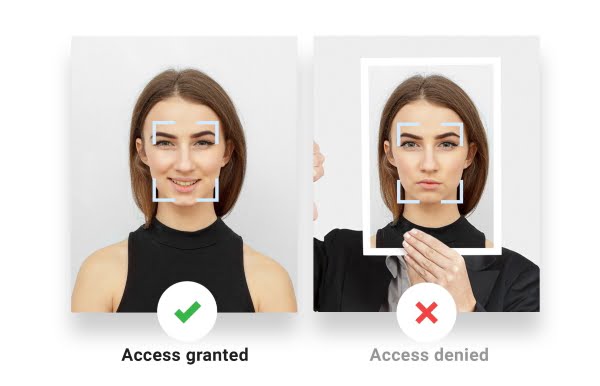

The “ID plus selfie” process, as Gartner analyst Akif Khan calls it, is how most finservs are verifying loan and credit applicants these days. The user takes a picture of their photo ID, such as a driver’s license, its authenticity is confirmed, then the user snaps a picture of themselves. The system checks the selfie for liveness and makes sure the biometrics line up with the photo ID document. Done.

The process is convenient for legitimate customers and fraudsters alike thanks to the growing availability of free deepfake apps. Using these free tools, fraudsters can deepfake images of docs and successfully pass the selfie step, most commonly by executing a “presentation attack” in which their primary device’s camera is aimed at the screen of a second device displaying a deepfake.

Khan advocates for a layered approach to deepfake mitigation, including tools that detect liveness and check for certain types of metadata. This is certainly on point, but there’s an old-school, far less technical way to ward off deepfaking fraudsters. Its success rate? 100%.

The good ol’ days

Remember handshakes? How about eye contact that didn’t involve staring into a camera lens? These are merely vestiges of the bygone in-person meetings that many finservs used to hold with loan applicants pre-COVID.

Outdated, and less efficient, as face-to-face meetings with customers might be, they’re also the only rock-solid defense against deepfakes.

Sure, the upper crust of finserv companies likely have state-of-the-art deepfake deterrents in place (e.g., 3D liveness detection solutions). But liveness detection doesn’t account for deepfaked documents or, more importantly, video, or the fact that the generative AI tools available to fraudsters are advancing just as fast as vendor solutions, if not faster. It’s a full-blown AI arms race, and with it comes a lot of unanswered questions.

In-person verification (only for high-risk activities) puts these fears to bed. Is it frictionless? Obviously far from it, though workarounds, such as traveling notaries that meet customers at their residence, help ease the burden. But if heading down to a local branch for a quick meet-and-greet is what it takes to snag a $10K loan, will a customer care? They’d probably fly across state lines if it meant renting a nicer apartment or finally moving on from their decrepit Volvo.

Time to layer up

Khan’s recommendation, for finservs to assemble a superteam of anti-deepfake solutions, is sound, so long as companies can afford to do so and can figure out how to orchestrate the many solutions into a frictionless consumer experience. Vendors indeed have access to AI in their own right, powering tools that directly identify deepfakes through patterns, or that key in on metadata such as the resolution of a selfie. Combine these with the most crucial layer, liveness detection, and the final result is a stack that can at the very least compete against deepfakes.
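To make the layering idea concrete, here is a minimal sketch of how three such signals might be combined into one decision. Everything in it is an assumption for illustration: the `SelfieSignals` fields, the thresholds, and the `review_selfie` function are hypothetical, not any vendor's API. It treats liveness as the deciding layer and routes softer warnings to a human or in-person check.

```python
from dataclasses import dataclass


@dataclass
class SelfieSignals:
    """Hypothetical outputs from three separate anti-deepfake layers."""
    liveness_score: float   # 0.0 to 1.0, higher means more likely a live person
    deepfake_score: float   # 0.0 to 1.0, higher means more likely synthetic
    width_px: int           # selfie resolution, taken from image metadata
    height_px: int


# Illustrative thresholds only; real values would need tuning on real data.
MIN_LIVENESS = 0.90
MAX_DEEPFAKE = 0.20
MIN_PIXELS = 640 * 480


def review_selfie(signals: SelfieSignals) -> tuple[str, list[str]]:
    """Return a decision plus the names of the layers that raised a flag."""
    flags = []
    if signals.liveness_score < MIN_LIVENESS:
        flags.append("liveness")
    if signals.deepfake_score > MAX_DEEPFAKE:
        flags.append("deepfake_pattern")
    if signals.width_px * signals.height_px < MIN_PIXELS:
        flags.append("low_resolution")

    if "liveness" in flags:
        decision = "reject"      # the most crucial layer failed
    elif flags:
        decision = "escalate"    # e.g. manual review or an in-person visit
    else:
        decision = "pass"
    return decision, flags
```

For example, `review_selfie(SelfieSignals(0.95, 0.40, 1280, 720))` returns `("escalate", ["deepfake_pattern"])`: the applicant looks live, but one layer is suspicious enough to justify a closer look.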

SuperSynthetics aren’t as easy to neutralize. In previous posts, we’ve advocated for a “top-down” anti-fraud solution that spots these types of identities before the loan or credit application stage. Unlike fraud prevention tools that evaluate each identity in isolation, this bird’s-eye view reveals digital fingerprints (concurrent account activities, simultaneous social media posts, etc.) that otherwise would go undetected.
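As a rough illustration of what a bird’s-eye view means in practice, the sketch below looks across all accounts at once and flags moments when several of them perform the same action within the same short time window. The event format, the function name `find_concurrent_clusters`, and the default window and threshold are all assumptions made for this example; it is not a description of any production system.

```python
from collections import defaultdict


def find_concurrent_clusters(events, window_seconds=60, min_accounts=3):
    """Group events by (action, time window) and keep the crowded groups.

    `events` is an iterable of (account_id, action, unix_timestamp) tuples.
    Returns a dict mapping (action, window_index) to a sorted list of the
    distinct accounts that performed that action inside that window.
    """
    buckets = defaultdict(set)
    for account_id, action, timestamp in events:
        window_index = int(timestamp // window_seconds)
        buckets[(action, window_index)].add(account_id)

    return {
        key: sorted(accounts)
        for key, accounts in buckets.items()
        if len(accounts) >= min_accounts
    }
```

Viewed one account at a time, a small deposit looks unremarkable. Viewed together, three accounts depositing within the same minute stand out. Fixed windows are a simplification: activity that straddles a window boundary would be split across two buckets, so a sliding window would be a natural refinement.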

In the meantime, it doesn’t hurt to consider the upside of an in-person approach to verifying customer identities (prior to extending a loan, not onboarding). No, it isn’t flashy, nor is it flawless. However, it is reliable and, if finservs effectively articulate the benefit to their customers—protecting them from life-altering fraud—chances are they’ll understand.